Venture Bytes #121: Emotional Intelligence is AI’s Missing Layer

Emotional Intelligence is AI’s Missing Layer

For years, AI could beat you at chess, ace your SAT, finish your sentence, and write code, but it couldn’t tell if you were angry, anxious, or about to churn. That emotional blind spot is where most foundation models still break. But with the convergence of multimodal learning, expressive voice interfaces, and contextual modeling, that gap is closing fast.

Recent research underscores just how far AI has come and how far it still has to go. A study by the University of Geneva and the University of Bern, which evaluated six generative AI models including ChatGPT-4, Claude 3.5 Haiku, and Gemini 1.5 Flash on emotional intelligence assessments, showed that AI models achieved an average accuracy of 81%, significantly outperforming the human average of 56%.

But outperforming humans on structured tests is one thing. Translating that into real-world empathy is something else entirely. In practice, these models still struggle with sarcasm, ambiguity, cultural nuance, and context, the very things that make human emotion so complex. Unless models can reason about emotional causes, sarcasm, consequences, and regulation, they will still lack the necessary prerequisites for real-world emotional intelligence. This paradox, machines outperforming humans on emotional intelligence tests while remaining emotionally tone-deaf in practice, illuminates both the opportunity and the challenge ahead.

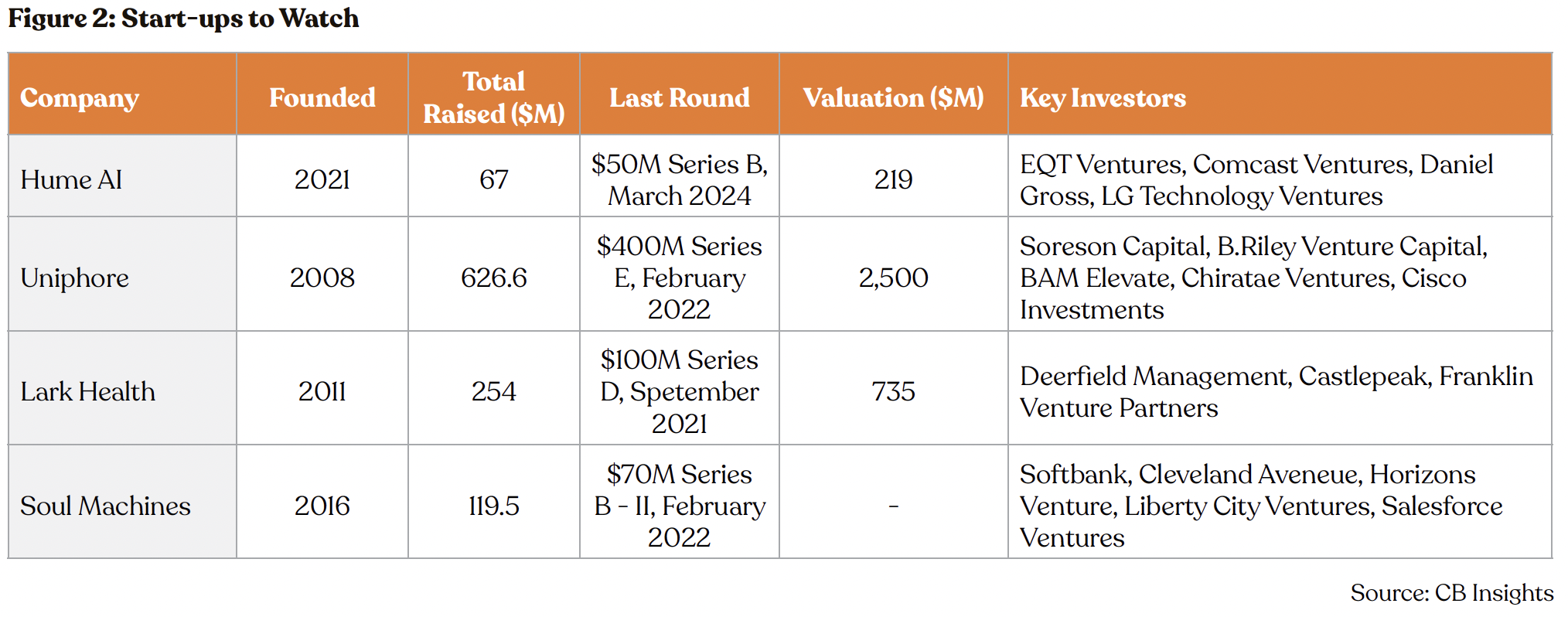

To address this, startups and researchers are building new model architectures and data pipelines. A key idea is multimodality, which combines language with vocal tone, facial cues, and context. For instance, New York-based Hume AI’s Empathic Voice Interface does exactly that, using an empathic LLM that fuses a standard language model with explicit audio-expression measures. This lets Hume’s agent adjust both what it says and how it says it, modulating tone and timing based on user expressions in real time. Unlike traditional models that generate responses based purely on linguistic patterns, models like this weigh emotional context equally with semantic meaning.

A few contextual shifts are making this moment ripe. First, the technology itself has arrived. We have large pretrained models, vast multimodal datasets (for voice, video, text), and abundant compute power. The availability of large-scale, culturally diverse emotional datasets has finally reached the threshold needed for robust model training. For instance, Hume AI alone has trained on data from millions of human conversations across 30+ countries, capturing emotional expressions that vary dramatically across cultures. Researchers are also increasingly looking to integrate physiological markers such as heart rate variability, skin conductance, or facial electromyography to boost emotion recognition accuracy.

Also, emotional intelligence is becoming a business lever. Companies have realized that emotionally aware AI can directly improve customer retention, care outcomes, and brand trust. What used to be considered “soft” UX is now measurable. For example, tone-matching in customer support improves customer satisfaction scores, and empathy in healthcare increases adherence.

While empathic AI matters across a wide range of industries, it is particularly important in sectors such as healthcare, customer support, and education, where trust, empathy, and emotional nuance are core to service delivery. AI models that can interpret and respond to human emotions are fast becoming a baseline expectation in these industries. Consider the $50 billion customer service industry, where a cold or robotic response can ruin a customer relationship. People often want more than the right answer; they also want to feel understood. If AI is going to handle more of these interactions, it needs to sound human and emotionally in tune.

Empathic AI is a growth driver in customer service. Companies that prioritize customer emotion outperform competitors by 85% in sales growth and more than 25% in gross margin, according to McKinsey. And that’s exactly why the gap between AI’s emotional IQ and its emotional impact matters so much. Players like Uniphore, leveraging vocal emotion analysis at scale, are pushing the boundaries of empathic interfaces.

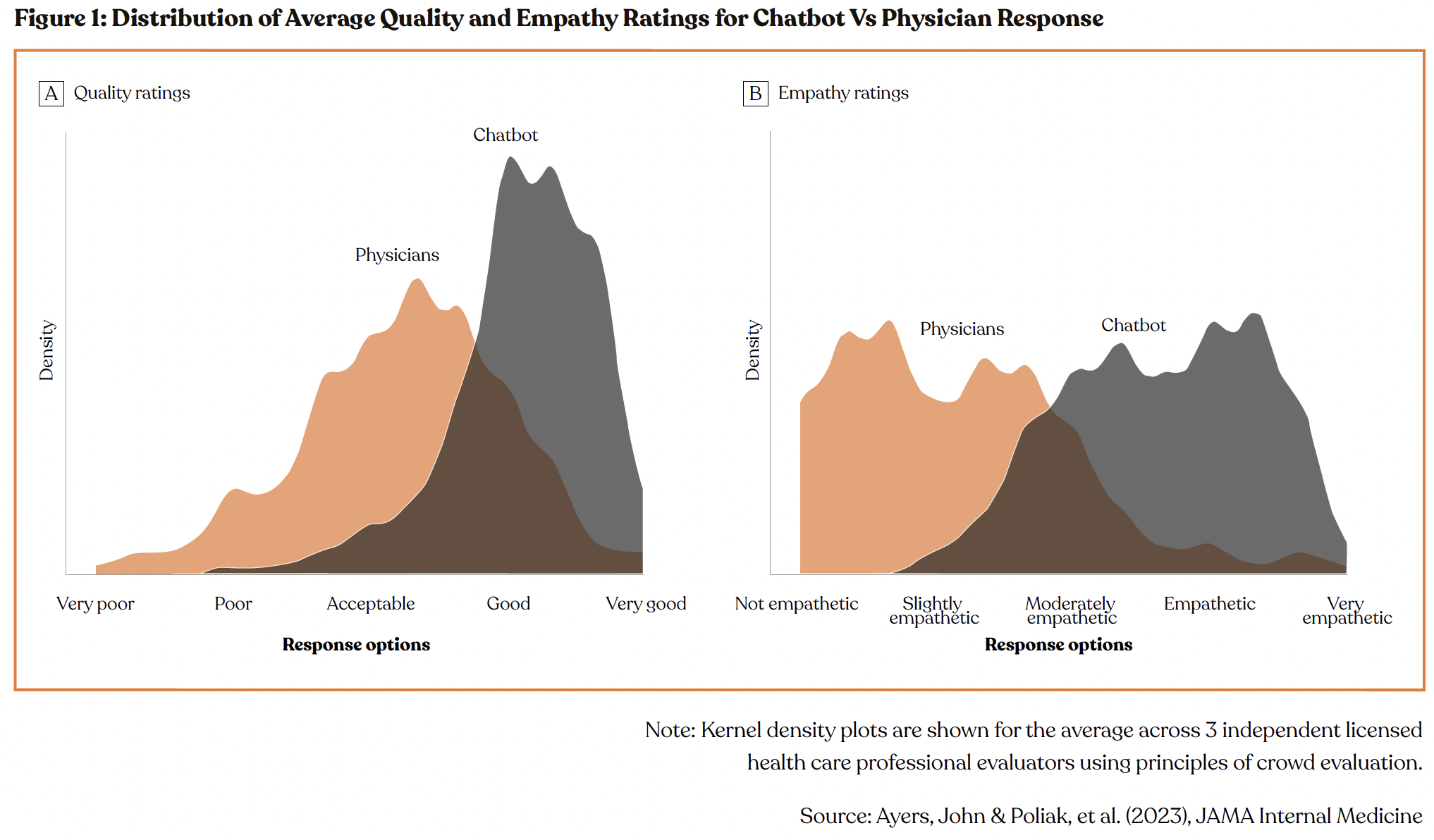

Empathic AI is starting to show real promise in the healthcare sector, which is facing rising patient complexity, staffing shortages, cost pressures, and growing demand for quality care. A recent study in JAMA Internal Medicine compared responses from doctors on Reddit’s r/AskDocs with those from OpenAI’s GPT-3.5. Surprisingly, the chatbot’s replies were rated as more empathetic and more helpful than the doctors’. That’s a big deal: it suggests AI can match or beat humans at the emotional part of care, not just the technical side.

Lark Health is another strong example. Its AI system exchanged around 400 million text messages with patients in a single year, something that would have taken nearly 15,000 full-time nurses to do manually. That kind of scale has allowed Lark to become a covered benefit under health plans serving 32 million people. So far, its AI nurse has supported about 2.5 million patients with chronic conditions.

Ellipsis Health also aims to fill similar gaps by providing empathetic, high-quality care management, especially in mental health, where there aren’t enough professionals to meet demand. Nearly 1 billion people around the world were living with a mental disorder as of 2019, according to the World Health Organization, and that number is likely higher by now. Ellipsis Health’s emotionally intelligent AI Care Manager, Sage, uses voice analysis as a biomarker for mental health assessment, particularly for stress, anxiety, and depression.

Startups like Soul Machines are also trying to make AI more emotionally responsive. The company has built digital avatars that show facial expressions and speak in more human ways. In tests, these “digital people” were 92% more effective and 85% more engaging than normal chatbots.

Observability is AI’s Next North Star

Billions have been poured into foundation models. However, very little capital has been invested in ensuring those models function effectively in the real world. History tells us the real value often lies in tools that make those systems usable at scale. Datadog did it for microservices. Snowflake did it for data. The same playbook is unfolding in AI, this time in the form of evaluation and observability.

A recent study from Software Improvement Group, a Netherlands-based provider of software quality assurance and IT consulting services, found that 73% of deployed AI systems suffer from quality issues. These aren’t experimental side projects, but systems used for important tasks such as pricing insurance, approving loans, and summarizing contracts, where accuracy is a must.

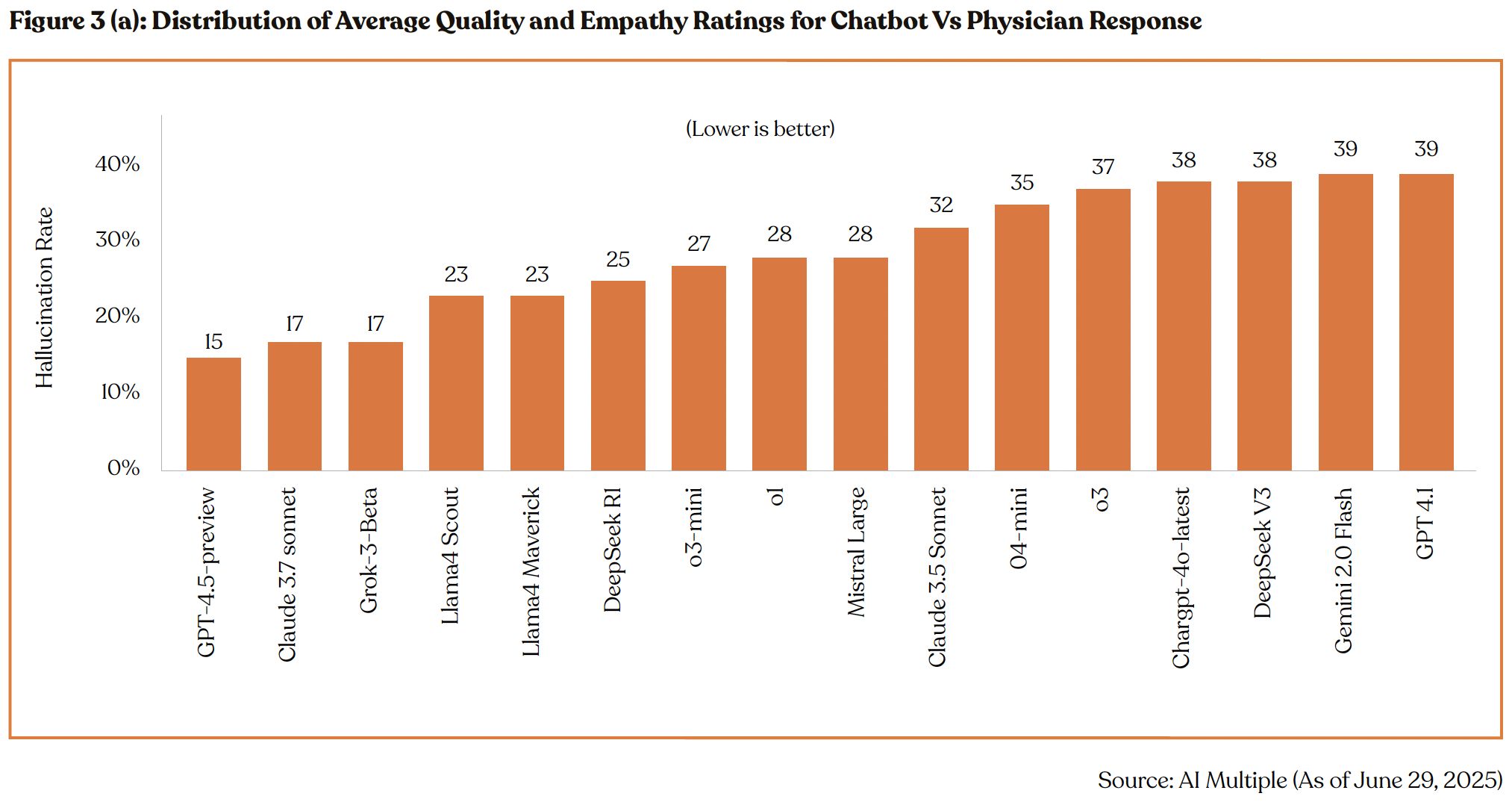

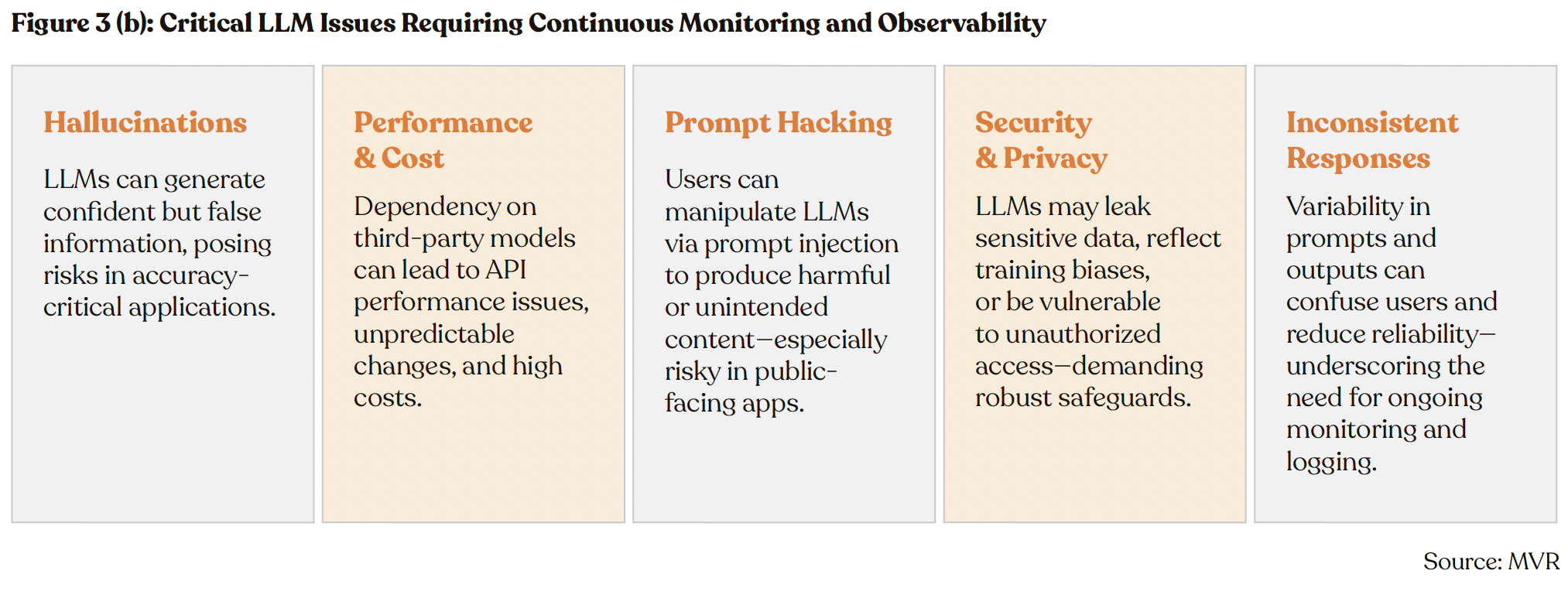

The complexity lies in the fact that traditional software testing methods, such as unit tests, regression checks, and static analysis, break down when applied to large language models (LLMs). LLMs are probabilistic, multi-output systems. They don’t fail the same way twice and can’t be debugged by stepping through code or reading logs. Instead, they require a layer of continuous, context-aware evaluation that can catch failure modes before they scale.
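To make the contrast with a one-shot unit test concrete, here is a minimal sketch of sampling-based evaluation: the same prompt is run many times and the result is a pass rate rather than a single pass or fail. Everything in it is an illustrative assumption. `make_toy_model` is a hypothetical stand-in for a real LLM call, `mentions_rate` is a hypothetical check, and the prompt and the 30% failure rate are made up for the example.

```python
import random
from typing import Callable


def pass_rate(
    generate: Callable[[str], str],
    check: Callable[[str], bool],
    prompt: str,
    n_samples: int = 20,
) -> float:
    """Run `generate` on the same prompt n_samples times and return the
    fraction of outputs that satisfy `check`."""
    if n_samples <= 0:
        raise ValueError("n_samples must be positive")
    passed = sum(1 for _ in range(n_samples) if check(generate(prompt)))
    return passed / n_samples


def make_toy_model(failure_rate: float, seed: int = 0) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: returns a wrong answer with
    probability `failure_rate`, otherwise a correct one."""
    rng = random.Random(seed)

    def generate(prompt: str) -> str:
        if rng.random() < failure_rate:
            return "I am not sure."
        return "The quoted rate is 4.5%."

    return generate


def mentions_rate(output: str) -> bool:
    """Hypothetical check: the answer must contain the expected figure."""
    return "4.5%" in output


if __name__ == "__main__":
    model = make_toy_model(failure_rate=0.3)
    rate = pass_rate(model, mentions_rate, "What rate was quoted?", n_samples=200)
    print(f"pass rate over 200 samples: {rate:.2f}")
```

A single run of this toy model would pass most of the time and hide the failures; only the aggregate over many samples exposes them. In practice the check would need to be context-aware as well, which a simple substring match is not.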

Against this backdrop, AI evaluation and observability stand out as high-growth opportunities. With Fortune 500 enterprises rapidly expanding their AI budgets, growing 150% YoY as of February 2025 (per Software Oasis), and global GenAI spend projected to hit $644 billion in 2025 (Gartner), the stakes are escalating. If even 10% of this budget is allocated to LLM observability and evaluation, it implies a $64 billion addressable market.
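The sizing above is straightforward arithmetic, and a short sketch shows how sensitive it is to the assumed allocation. The $644 billion figure and the 10% share come from the text; the 5% and 15% scenarios and the function name `addressable_market_bn` are illustrative assumptions, not forecasts.

```python
# Projected 2025 GenAI spend cited above (Gartner), in billions of USD.
GENAI_SPEND_2025_BN = 644.0


def addressable_market_bn(total_spend_bn: float, share: float) -> float:
    """Return the spend (in $bn) implied if `share` of the total budget
    goes to LLM evaluation and observability."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return total_spend_bn * share


if __name__ == "__main__":
    # 0.10 is the share used in the text; 0.05 and 0.15 are assumed scenarios.
    for share in (0.05, 0.10, 0.15):
        market = addressable_market_bn(GENAI_SPEND_2025_BN, share)
        print(f"{share:.0%} allocation -> ${market:.1f}bn")
```

At the 10% share the result is $64.4 billion, which the text rounds to $64 billion. The estimate scales linearly with the share, so the headline number is only as reliable as that single assumption.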

Compounding the issue of models not functioning effectively is the growing reliance on synthetic datasets, with models increasingly trained and evaluated on data generated by other models. This creates a closed feedback loop, where models learn to optimize for artificial patterns rather than real-world complexity. According to OpenEvals research from Arize AI, LLMs perform significantly worse on synthetic versus real-world datasets, amplifying the potential for blind spots in enterprise use.

These shortcomings have real economic consequences. Gartner estimates that 40% of agentic AI projects will be abandoned by 2027, citing reliability and governance gaps. In enterprise settings, even a single undetected model failure, like misclassifying a loan application, hallucinating in a legal co-pilot, or reinforcing bias in a customer support agent, can cost millions.

For investors, this signals two things. First, there is a structural bottleneck that’s suppressing AI’s return on investment at scale. Second, there is an emerging, inevitable demand curve for infrastructure that restores reliability, trust, and visibility in production systems. Investor focus is also shifting from the lower half of the AI value chain (hardware, hyperscalers, and foundation models), which is reaching a saturation point, to the upper half, which includes customer-facing applications. In this context, AI evaluation and observability represent one of the most prominent and investible areas in the stack.

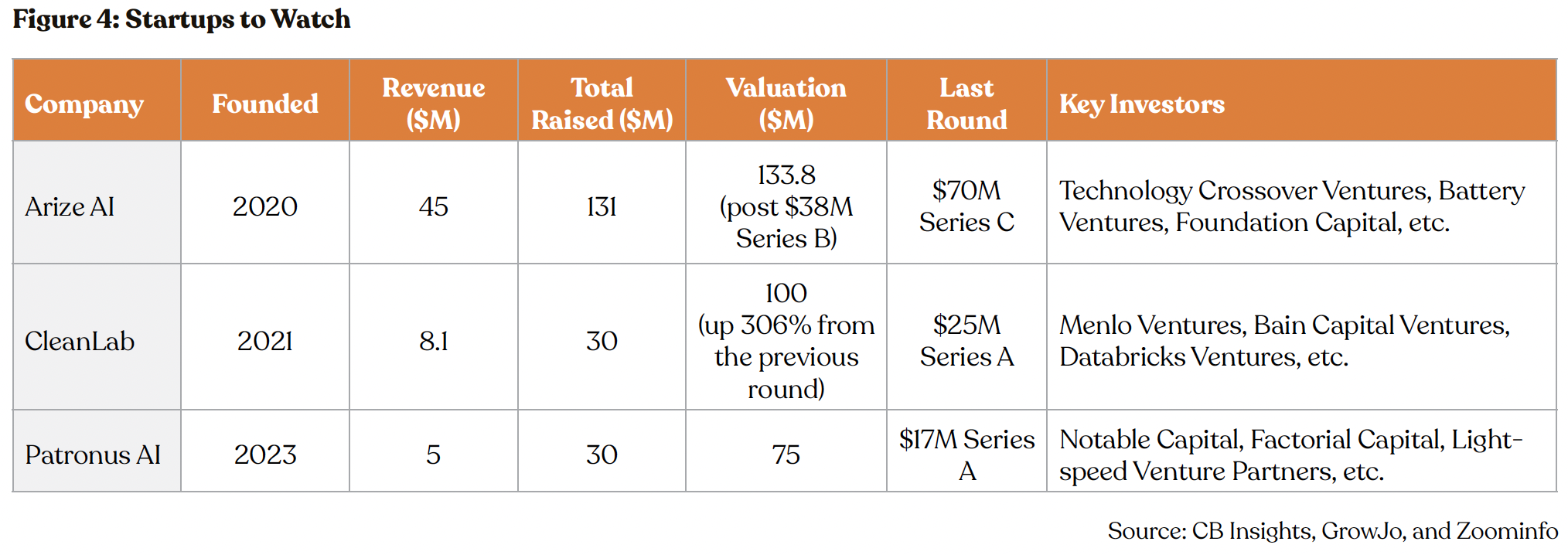

Startups such as Arize AI, Patronus AI, and Cleanlab are emerging as the default toolkit for Fortune 500 pilots and are poised to become highly valuable companies. Such startups will benefit from rapid AI adoption, as 68% of companies plan to invest $50–$250 million in generative AI in 2025, per Arize AI. Additionally, enterprise AI spend is growing 75% year-on-year, per SaaStr.

Arize AI, based in California, offers a platform that integrates development and production workflows and provides real-time analytics to detect and resolve issues quickly, making it an essential tool for AI-driven enterprises. The company serves clients including Booking.com, Condé Nast, Duolingo, Hyatt, PepsiCo, Priceline, TripAdvisor, Uber, and Wayfair, among hundreds of others. The platform processes 1 trillion spans, conducts 50 million evaluations, and has 5 million downloads per month, reflecting its widespread adoption.

Cleanlab, also California-based, is already in use by over 10% of Fortune 500 companies, including AWS, JPMorgan Chase, Google, Oracle, and Walmart. Cleanlab’s automated approach to data curation positions it as a critical player in the AI observability market, which is projected to grow at a CAGR of 22.5%, reaching $10.7 billion by 2033, per Market.us.

Patronus AI offers the industry’s first multimodal LLM-as-a-Judge, a tool built to evaluate AI systems that analyze images and generate text. E-commerce leader Etsy has adopted this technology to validate the accuracy of image captions across its marketplace of handmade and vintage products.
