Venture Bytes #122: Speed is Not the Issue in AI, Control Is

Emotional Intelligence is AI’s Missing Layer

Sam Altman recently admitted that seeing GPT-5 in action made him feel “useless”. When the CEO of one of the most powerful AI labs on the planet confesses to feeling obsolete, you know something fundamental has shifted. It also raises the vital question: Is AI moving too fast?

Let’s consider the pace of progress. GPT-3, launched in 2020, struggled with logic puzzles and basic math. GPT-5 can solve graduate-level proofs, generate code that compiles on the first try more than 90% of the time, and act as a multi-agent team coordinator. Adoption has also surged over the last two years, with 99% of Fortune 500 companies integrating AI into business workflows. The world’s largest tech companies are in an arms race to develop models, backed by billions of dollars in compute and armies of researchers. In addition to OpenAI’s GPT-5, Anthropic launched Claude 3.5, which shocked even insiders with its emergent reasoning. Meanwhile, agents are executing tasks on behalf of companies, users, and even other AIs.

So yes, AI is moving fast. But speed is not the enemy. Instead, the phrase “too fast” has become a placeholder, a vague signal of anxiety around AI’s growing power.

However, AI’s breakneck speed is exposing a few issues. The first one is model capability vs. human control. Models are outpacing our ability to interpret, align, or contain them. We’re racing ahead with generative models that exceed human-level capabilities, but we still lack reliable mechanisms to predict or correct their behavior. A group of 40 AI researchers, including contributors from OpenAI, Google DeepMind, Meta, and Anthropic, warned they may be losing the ability to understand advanced AI models.

This creates a ‘capability-control gap’, a dangerous lag where our ability to build exceeds our ability to understand or steer. If AI were a car, we’ve just discovered the turbo button. But we haven’t invested enough in brakes, steering, or airbags.

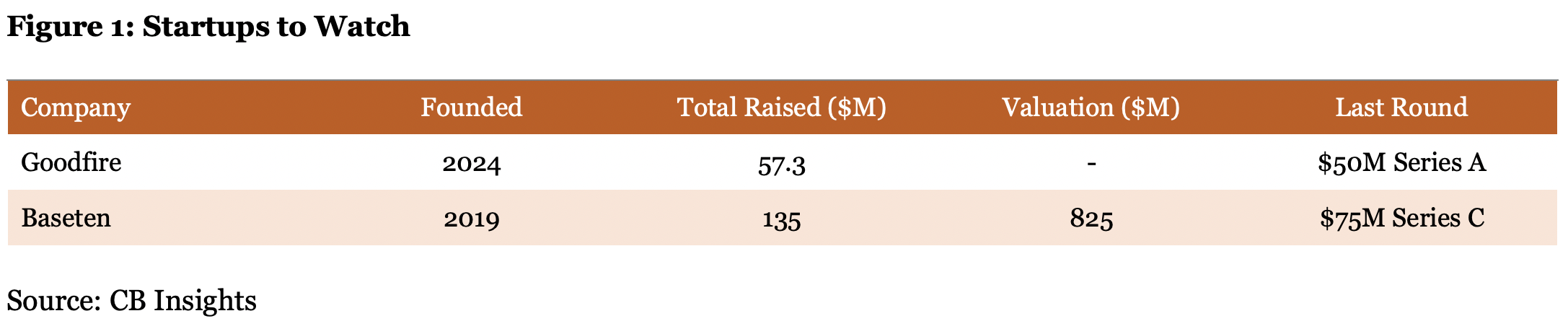

As models become more capable and autonomous, enterprises entrenched in regulated industries (healthcare, finance, defense) will demand trustworthy systems with discernible behaviors. Startups that build interpretability, observability, alignment infrastructure, and governance tooling stand to capture foundational roles in the AI ecosystem. California-based Goodfire is solving one such issue by investing significantly in mechanistic interpretability research. This is a relatively nascent science of reverse engineering neural networks and translating those insights into a universal, model-agnostic platform. Backed by prominent investors such as Lightspeed Venture Partners and Anthropic, the company focuses on AI interpretability, positioning itself at the forefront of a critical aspect of AI safety. As AI systems become more complex and widely deployed, the demand for tools that can make these systems transparent and controllable is likely to grow significantly.

“Nobody understands the mechanisms by which AI models fail, so no one knows how to fix them,” – Eric Ho, co-founder and CEO of Goodfire.

The second major issue is centralization vs. open access. Yes, open-source AI is thriving, with models like those from Mistral rapidly gaining traction. But at the top end, power is concentrating fast. Model weights are becoming increasingly closed, and access to cutting-edge GPUs is tightly controlled. Just five labs (OpenAI, Anthropic, Google DeepMind, Meta, and xAI) now command over 85% of the enterprise LLM market, according to Menlo Ventures, and lead the charge on frontier model innovation. These players are vertically integrating everything: the foundation models, the data pipelines, and the deployment platforms. And they’re spending at a scale no one else can match. Google alone, for instance, plans to invest $75 billion in AI infrastructure in 2025.

This consolidation feeds on itself. The world’s top AI talent continues to flock to these labs. The US brought in over 32,000 foreign AI workers in just the last three years, who now make up nearly 40% of AI roles at top tech firms. Meanwhile, regulators are playing catch-up. Most AI governance frameworks, in both the US and the EU, rely on post-hoc oversight or entity-based rules that struggle to keep up with fast-paced releases and the global footprint of these labs.

Understandably, capital follows power. So far in 2025, 41% of all US VC funding has gone to just 10 companies, eight of which are big AI labs, per PitchBook. OpenAI raised $40 billion, while xAI and Anthropic collectively raised $20 billion, reinforcing the gravitational pull around a handful of players.

But this dynamic also creates an emerging ‘middle-market’ between hyperscale labs and hobbyist projects. One of the most compelling plays here is vertical AI for high-value domains. In healthcare, finance, defense, and energy, customers prize accuracy, explainability, and compliance over raw model size. Domain-specific agents and fine-tuned models can outperform general-purpose LLMs when the stakes are high. For middle-market startups, this is a defensible niche where barriers to entry are regulatory, trust-based, and industry-specific. A key player in this area is Baseten. Founded in 2019, the company provides a platform for deploying and scaling machine learning models, with an emphasis on AI inference and infrastructure optimization. Rather than chasing ever-larger frontier models, Baseten focuses on making AI deployable in compliance-heavy, high-value verticals. The startup, which was valued at $825 million after its Series C round earlier in 2025, saw revenue grow sixfold in the most recent fiscal year.

Whether this moment is more like 1998’s internet boom, the atomic age, or the Industrial Revolution, one thing is clear: speed alone is not the problem. The bottleneck is our ability to steer, understand, and distribute AI’s benefits wisely.

The SaaS Stack is Compressing

In our recent Venture Bytes, we explored how AI agents are disrupting enterprise software. Early evidence continues to mount: BetterCloud's 2025 State of SaaS Report shows persistent SaaS rationalization, with organizations using fewer SaaS apps for the second straight year. In 2024, companies deployed an average of 106 SaaS tools, down from 112 in 2023 and 130 in 2022, an 18% decline from the 2022 peak.
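For readers who want to check the arithmetic, here is a minimal sketch that recomputes the decline from the three figures cited above. The variable and function names are ours, chosen for illustration; only the app counts come from the report.

```python
# Average SaaS apps per company, as cited from BetterCloud's report above.
apps_per_year = {2022: 130, 2023: 112, 2024: 106}


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new (negative means a decline)."""
    return (new - old) / old * 100


# Decline from the 2022 peak to 2024, and the most recent year-over-year move.
peak_to_now = pct_change(apps_per_year[2022], apps_per_year[2024])
year_over_year = pct_change(apps_per_year[2023], apps_per_year[2024])

print(f"2022 to 2024: {peak_to_now:.1f}%")
print(f"2023 to 2024: {year_over_year:.1f}%")
```

The peak-to-2024 figure works out to roughly 18%, in line with the number quoted, while the latest single-year drop is closer to 5%, which suggests the pace of rationalization slowed in 2024.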

But this SaaS rationalization is just the surface symptom of a deeper architectural transformation. AI agents are poised to compress the traditional three-layer enterprise software stack into a more streamlined model, reshaping value accumulation in the enterprise software market.

The current stack is ripe for compression. For the past decade, enterprise software has operated on a three-layer architecture. Layer 1 (the UI/application layer) comprises SaaS companies like HubSpot and Workday. Layer 2 (the middleware/integration layer) comprises iPaaS players like MuleSoft and Boomi. Layer 3 (the infrastructure layer) comprises data platforms like Snowflake, Databricks, and BigQuery that store and process the underlying information.

This stack made sense in a world where humans needed dashboards, forms, and workflows to interact with data. But AI agents fundamentally change the equation. These agents need direct data access and execution capabilities. Agents route around the application layer entirely, pulling data directly from infrastructure providers, stitching workflows via APIs, and executing actions without human interface mediation.

Consider a typical marketing workflow today: a manager logs into HubSpot (which costs around $40,000 annually for Marketing Hub Professional), exports contact data, analyzes it in Excel, creates campaigns, and monitors performance across multiple dashboards. This multi-step process is what justifies the subscription fee.

Now imagine an AI agent with direct access to the data warehouse. It analyzes customer behavior patterns, identifies high-propensity segments, generates personalized campaigns, and optimizes performance, all without touching HubSpot's interface. The agent doesn't need HubSpot's UI, reporting capabilities, or workflow orchestration. It just needs clean data and execution APIs. This is the ‘serverless’ analogy in action. Just as serverless computing moved application logic from dedicated servers to cloud functions, agentic AI moves business logic from SaaS applications to intelligent agents that sit closer to the data layer.
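To make the pattern concrete, here is a deliberately simplified sketch of an agent that reads from the data layer and calls an execution API with no application UI in between. Everything in it is an assumption for illustration: an in-memory SQLite database stands in for the warehouse, the `events` table and its rows are made up, and `send_campaign` is a hypothetical execution API, not any vendor's real interface.

```python
import sqlite3

# In-memory SQLite stands in for the data warehouse (illustrative assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (customer TEXT, segment TEXT, purchases INTEGER, visits INTEGER)"
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?, ?)",
    [  # made-up sample rows
        ("a", "smb", 4, 10),
        ("b", "smb", 1, 10),
        ("c", "enterprise", 6, 10),
        ("d", "enterprise", 5, 10),
        ("e", "consumer", 1, 20),
    ],
)


def high_propensity_segments(db, threshold):
    """Return (segment, rate) pairs whose purchases-per-visit rate meets the threshold."""
    return db.execute(
        "SELECT segment, 1.0 * SUM(purchases) / SUM(visits) AS rate "
        "FROM events GROUP BY segment "
        "HAVING 1.0 * SUM(purchases) / SUM(visits) >= ? "
        "ORDER BY rate DESC",
        (threshold,),
    ).fetchall()


outbox = []


def send_campaign(segment, message):
    """Hypothetical execution API; here it only records the request."""
    outbox.append((segment, message))


def run_agent(db, send, threshold=0.2):
    """Query the data layer directly, then act through the execution API."""
    targeted = []
    for segment, rate in high_propensity_segments(db, threshold):
        send(segment, f"Offer for {segment} (purchase rate {rate:.0%})")
        targeted.append(segment)
    return targeted


targeted = run_agent(conn, send_campaign)
print(targeted)
```

The point of the sketch is structural rather than practical: the only dependencies are a query interface and an action interface, which is exactly the part of the stack the application layer used to mediate.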

The most profound implication is how this reshapes data gravity. In today's SaaS-dominated world, data accumulates in application silos. For example, HubSpot stores marketing data, Salesforce holds sales data, and Workday contains HR data. Integration complexity keeps this data fragmented, which reinforces application lock-in.

AI agents invert this dynamic. They want unified, clean data lakes that enable cross-functional analysis and action. Instead of moving data up to applications, the intelligence moves down to where the data lives. This fundamentally advantages infrastructure providers who can offer agents direct, high-performance access to consolidated datasets.

Databricks understands this shift. With the Mosaic AI platform, they're building the agent execution layer directly into the warehouse. Why would an agent pull data from Databricks, send it to a SaaS application for processing, then return results? It's far more efficient to run the intelligence where the data lives.

This structural shift creates clear winners and losers based on where companies sit in the stack and how defensible their data assets are. Companies like Snowflake, Databricks, and Google BigQuery are perfectly positioned. They already host enterprise data and have the technical capability to add agent execution layers. Snowflake's recent launch of Cortex Agents, AI agents that operate directly within the data warehouse, signals its intent to capture value higher in the stack.

Additionally, data movement platform Airbyte and LangChain, which develops frameworks and tools for building AI applications powered by large language models, stand out. Based in California, Airbyte reached a $1.5B valuation in December 2021, just 18 months after founding. By July 2025, its open-source community had grown to over 230,000 members with 900 active contributors, reflecting both rapid adoption and deep developer engagement.

LangChain, backed by Sequoia, has become the go-to framework for building applications powered by large language models. Valued at $1.1B in its Series B round in July 2025, LangChain counts Klarna, Rippling, and Replit among its users, and generates an ARR of $12–16M. Its traction highlights a growing enterprise preference for modular, developer-friendly AI tooling that accelerates application development and deployment.

We are already seeing early signals of stack compression. Klarna eliminated 1,200 software tools after deploying AI agents. Most of these weren't core systems but SaaS applications that provided interfaces to underlying data sources. The agents could access the same data directly and perform the analysis more effectively.

This dynamic could drive a wave of strategic acquisitions as infrastructure players race to build agent capabilities before losing ground to competitors. Against this backdrop, AI-native startups become lucrative acquisition targets for data platforms to accelerate their move up the stack. Similarly, cloud providers could go after agent orchestration companies to control the execution layer.

However, organizations need to overcome hurdles like data fragmentation and integration complexity to deploy AI agents successfully. The ‘serverless-like’ shift moves logic closer to data, but it assumes clean and unified datasets, which remain rare in enterprises weighed down by legacy silos. Gartner, for its part, predicts that over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, and inadequate risk controls. As Anushree Verma, senior director analyst at Gartner, notes: "Most agentic AI projects right now are early-stage experiments or proof of concepts that are mostly driven by hype and are often misapplied."
