
Venture Bytes #124: What Can Quantum Do That AI Cannot?

What Can Quantum Do That AI Cannot?

In our last Venture Bytes, we discussed how quantum advantage, the point at which quantum computers outperform classical ones on meaningful tasks, is fast approaching. One prominent question from readers was: do we really need quantum computing now that we have AI? What problems exist that AI can’t already solve? And more pointedly, does investing billions in quantum computing make sense from a return-on-investment standpoint?

These are the right questions. AI has redefined what we expect from machines: pattern recognition, prediction, and even creativity. But for all its power, it is still a statistical instrument. It doesn’t ‘understand’ nature at a fundamental level; it only imitates patterns drawn from it. AI cannot solve optimization problems with absolute certainty, and it cannot simulate quantum mechanical systems. Quantum computing, by contrast, doesn’t predict but computes what nature must do. That distinction is the basis for solving problems that AI fundamentally can’t.

The simplest way to understand it is that a classical computer adds power linearly, meaning each new bit gives one extra notch of compute. A quantum computer scales exponentially with every qubit added, because n qubits can represent 2^n states at once. For example, three qubits span eight states, 10 span 1,024, and 20 span about a million. A 300-qubit quantum computer, in principle, may have more compute power than all supercomputers on earth combined.
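The exponential scaling is easy to check in a few lines of Python. This is a minimal sketch that assumes "compute" here means the number of basis states (2^n) an n-qubit register can hold in superposition; the function name is illustrative, not taken from any quantum library.

```python
def basis_states(n_qubits: int) -> int:
    """Number of basis states an n-qubit register spans: 2**n."""
    if n_qubits < 0:
        raise ValueError("n_qubits must be non-negative")
    return 2 ** n_qubits


# Qubit counts mentioned in the text above.
for n in (3, 10, 20, 300):
    states = basis_states(n)
    print(f"{n:>3} qubits -> {states:.3e} basis states")
```

The count says nothing about how much of that state space a real algorithm can usefully exploit; it only illustrates why each added qubit doubles the size of the problem a classical machine would have to track.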

But what does that actually mean in practice? Quantum computing’s near-term applications are concentrated in domains where classical systems and AI models reach hard computational limits, problems characterized by quantum complexity or combinatorial explosion.

A key area where quantum computing will create profound impact is the discovery of new drugs and materials. Such discoveries ultimately depend on the ability to understand how molecules interact at the quantum level, how electrons arrange themselves, how bonds form, and how energy transitions occur. Quantum computing directly addresses the inherent limitations of classical computing in computer-aided drug design because molecules operate by quantum rules.

Few examples illustrate the constraints of classical computing better than the pharmaceutical industry’s twenty-year quest to inhibit BACE1, a key enzyme linked to Alzheimer’s disease. Researchers spent over a decade and $15 billion, only for nearly all programs to fail in late-stage trials. The core problem was computational inadequacy. Classical computers, relying on simplified ‘ball-and-spring’ models, cannot simulate the quantum forces dictating how a drug binds to its target. This led to molecular docking simulations that were fundamentally guesswork. Consequently, over 99% of compounds synthesized after these flawed simulations failed in the lab, burning through hundreds of millions of dollars in a cycle of ‘compute-synthesize-test-fail.’

Even AI couldn’t have cracked it. AI excels at finding correlations in existing data and the flaw in the BACE1 program was a profound lack of accurate data. The failure was one of first-principles physics, not pattern recognition.

In 2023, the 20 global pharmaceutical companies with the highest R&D spend invested $145 billion in research and development, according to Deloitte. The pharma R&D failure rate is extremely high, with around 90% of drugs failing during clinical development. Quantum simulations that increase success rates even marginally represent tens of billions in savings. Accordingly, all major pharma companies are building a quantum strategy through partnerships and in-house capabilities.

Another key use-case where quantum can excel and AI can’t is portfolio optimization and risk management. A pension fund with 500 assets trying to optimize allocation while respecting constraints (risk limits, sector exposure, liquidity requirements) faces 2^500 possible portfolio configurations, even if each asset is simply included or excluded. Classical algorithms, including AI, use heuristics to find ‘good enough’ solutions. But they can't prove they've found the global optimum, and in markets with razor-thin alpha, ‘good enough’ isn't good enough.

On the other hand, quantum annealers and gate-based quantum computers can, in principle, explore the solution space in superposition, aiming for optimal solutions to complex constrained optimization problems that classical heuristics can only approximate. JPMorgan has published over a dozen papers on quantum algorithms for derivatives pricing and portfolio optimization. Goldman Sachs is developing quantum algorithms for Monte Carlo simulations that could deliver a quadratic speedup, reducing million-sample simulations to the order of a thousand.
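The Monte Carlo figure follows from what "quadratic speedup" means. The sketch below assumes the idealized textbook case, where a task needing N classical samples needs on the order of sqrt(N) quantum queries, and it ignores constant factors, error correction, and hardware overhead. The function name is hypothetical.

```python
import math


def quantum_queries_for(classical_samples: int) -> int:
    """Queries needed under an idealized quadratic speedup: floor(sqrt(N))."""
    if classical_samples < 0:
        raise ValueError("classical_samples must be non-negative")
    return math.isqrt(classical_samples)


# Compare a few classical sample budgets with their idealized quantum counterparts.
for n in (10_000, 1_000_000, 100_000_000):
    print(f"{n:>11,} classical samples -> ~{quantum_queries_for(n):,} quantum queries")
```

The gap widens as the sample budget grows, which is why the speedup matters most for the largest risk simulations.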

For context, CalPERS manages $500+ billion in assets on behalf of more than 2 million members. A 10-basis-point improvement in return, enabled by quantum optimization, represents half a billion dollars annually. When multiplied across every major institution, quantum optimization can offer a structural, compounding advantage.
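The basis-point arithmetic is simple to verify. This is a back-of-the-envelope sketch that takes the $500 billion asset base quoted above at face value and treats the improvement as a flat annual uplift; the function name is illustrative.

```python
def annual_gain_usd(assets_usd: float, improvement_bps: float) -> float:
    """Extra annual return from an improvement quoted in basis points (1 bp = 0.01%)."""
    return assets_usd * improvement_bps / 10_000


gain = annual_gain_usd(500_000_000_000, 10)
print(f"${gain / 1e9:.2f} billion per year")
```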

This does not mean quantum will replace AI in portfolios; they are complementary technologies solving different problems. AI will be bigger in aggregate market cap. But quantum will unlock specific, high-margin applications where being the first mover creates structural advantages.

A third critical application where quantum computing offers transformational potential is climate modeling and extreme weather prediction. The Earth's climate system is one of the most complex dynamical systems, involving coupled nonlinear differential equations across atmospheric physics, ocean dynamics, ice sheet behavior, and biogeochemical cycles. Current climate models divide the planet into grids, but computational constraints force these grids to be coarse, typically 100-200 kilometers per cell. At this resolution, critical phenomena like cloud formation, turbulence, and localized extreme weather events cannot be accurately captured.

The computational bottleneck is profound. To double the resolution of a global climate model (halving grid size in all three dimensions plus time), roughly 16 times more compute power will be required. By the same scaling, moving from today's 100km resolution to a 10km resolution that could accurately model hurricanes, thunderstorms, and regional climate impacts would require on the order of 10,000 times more computational power than currently available. This is not a problem AI can solve, as weather systems follow physical laws encoded in differential equations, not statistical patterns.
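The scaling rule described above can be written as a one-line function. This is a rough sketch that assumes cost grows as the refinement factor raised to the fourth power (three spatial dimensions plus the time step) and ignores memory, I/O, and added physics; the function name and default are illustrative.

```python
def compute_multiplier(refinement: float, dims: int = 4) -> float:
    """Relative compute cost when every one of `dims` dimensions is refined by `refinement`."""
    if refinement <= 0:
        raise ValueError("refinement must be positive")
    return refinement ** dims


# Doubling the resolution, and refining from 100 km cells to 10 km cells (a factor of 10).
for factor in (2, 10):
    print(f"{factor}x finer grid -> {compute_multiplier(factor):,.0f}x compute")
```

Real models do not always refine every dimension equally, so the exponent is best read as an upper-end assumption, not a fixed law.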

Quantum computers can potentially address this through quantum algorithms designed for solving nonlinear differential equations and sampling from complex probability distributions far more efficiently than classical methods. The implications are enormous. More accurate extreme weather warnings could save thousands of lives annually, help communities prepare for floods, droughts, and heat waves, as well as enable more informed policy decisions on infrastructure, agriculture, and disaster preparedness. Insurance companies alone could save billions through improved actuarial modeling of climate risks.

A recent report from the International Chamber of Commerce estimated that climate-related extreme weather events have cost the global economy more than $2 trillion over the past decade. This figure is projected to rise as climate change intensifies. Even modest improvements in prediction accuracy could translate into tens of billions in reduced damages and more effective resource allocation for disaster response.

Several promising private companies are building quantum solutions for these high-impact applications. Massachusetts-based QSimulate specializes in quantum algorithms for chemistry and materials science, with a focus on simulating electron correlation in complex molecules for pharmaceutical applications. Spain-based Multiverse Computing develops quantum-inspired AI model compression technology. The company works with major enterprises like Bosch, Bank of Canada, BBVA, and Moody's, aiming to demonstrate quantum advantage in real-world portfolio management with actual market data. Paris-based Pasqal is building neutral-atom quantum processors specifically optimized for difficult optimization and simulation problems, including applications in climate modeling and weather prediction.

It All Comes Down to Gigawatts


Two pieces of AI-related news made headlines recently. OpenAI entered a 6-gigawatt (GW) agreement with AMD to power its next-generation AI infrastructure across multiple generations of AMD Instinct GPUs. OpenAI also contracted Broadcom to deploy 10 GW of custom AI accelerators by 2029. Notice how AI chip deals are now being discussed in gigawatts, not in numbers of H100s or H800s? These deals signal a fundamental shift in how the AI industry thinks about scaling infrastructure: it is as much about energy and energy components as it is about silicon itself.

“We still don’t appreciate the energy needs of this technology… there’s no way to get there without a breakthrough… we need fusion or we need radically cheaper solar plus storage, or something”. – Sam Altman, founder and CEO of OpenAI.

For the past few years, the narrative around AI infrastructure has been dominated by semiconductors. Nvidia became a trillion-dollar company. Nations scrambled to secure chip supply chains. The CHIPS Act allocated billions to domestic semiconductor manufacturing. But here's what's becoming clear: companies can have all the chips in the world, but if there is no electricity to power them, they're just expensive paperweights.

As of early 2024, more than 11,000 data centers were operating worldwide. Most conventional facilities have an average power capacity of just 5-10 MW, while the typical hyperscale cloud data center runs at around 30 MW. But that baseline is being rewritten at astonishing speed.

To meet AI's requirements, data centers are evolving into industrial-scale energy consumers. The new generation of AI data centers now ranges from 75 MW to 150 MW, with the largest projects under construction designed for 500 MW to 1 GW of power capacity. At those levels, each site consumes as much electricity as a mid-sized city.

The capital commitments reflect this paradigm shift. Microsoft, Meta, Google, and Amazon spent a combined $125 billion on investing in and running AI data centers between January and August 2024, according to a JPMorgan report citing New Street Research. Microsoft and OpenAI are in discussions for Project Stargate, a $100 billion, 5 GW supercomputer complex that would redefine the upper limit of compute infrastructure. Amazon plans to invest $150 billion over the next 15 years in data centers. The IEA estimates that by 2030 the US economy will consume more electricity for processing data than for producing all aluminum, steel, cement, and chemicals combined.

While training large models dominates the narrative in AI energy use, inference takes that demand to another level, scaling continuously with every user query, not every model update. Inference accounts for 60-70% of total AI energy consumption versus 20-40% for training, a ratio that's tilting even more dramatically toward inference as models scale to billions of daily queries.

Despite major strides in chip performance, power demand continues to climb. Nvidia's per-chip power draw rose from 400W for the A100 in 2020 to 1,200W for the B200 in 2024. In terms of output, the A100 delivered 156 TOPS per watt, and the B200 achieves 500 TOPS per watt. That is a meaningful improvement of roughly 3.2x, but it only just keeps pace with the tripling of power draw, so each chip still consumes three times the electricity. These diminishing returns create market gaps for alternative architectures, specialized inference chips, and power management solutions that can deliver AI capabilities with dramatically lower energy requirements.
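The comparison comes down to two ratios. The sketch below uses only the A100 and B200 figures quoted above, taken at face value and not checked against vendor datasheets; the dictionary keys and helper name are illustrative.

```python
# Figures as quoted in the text above (not independently verified).
a100 = {"power_w": 400, "tops_per_w": 156}
b200 = {"power_w": 1200, "tops_per_w": 500}


def ratio(new: float, old: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


power_ratio = ratio(b200["power_w"], a100["power_w"])
efficiency_ratio = ratio(b200["tops_per_w"], a100["tops_per_w"])

print(f"Power draw per chip: {power_ratio:.1f}x")
print(f"Quoted efficiency:   {efficiency_ratio:.2f}x")
```

Seen side by side, the two ratios are close: the quoted efficiency gain roughly matches the growth in per-chip power, so it does not reduce it.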

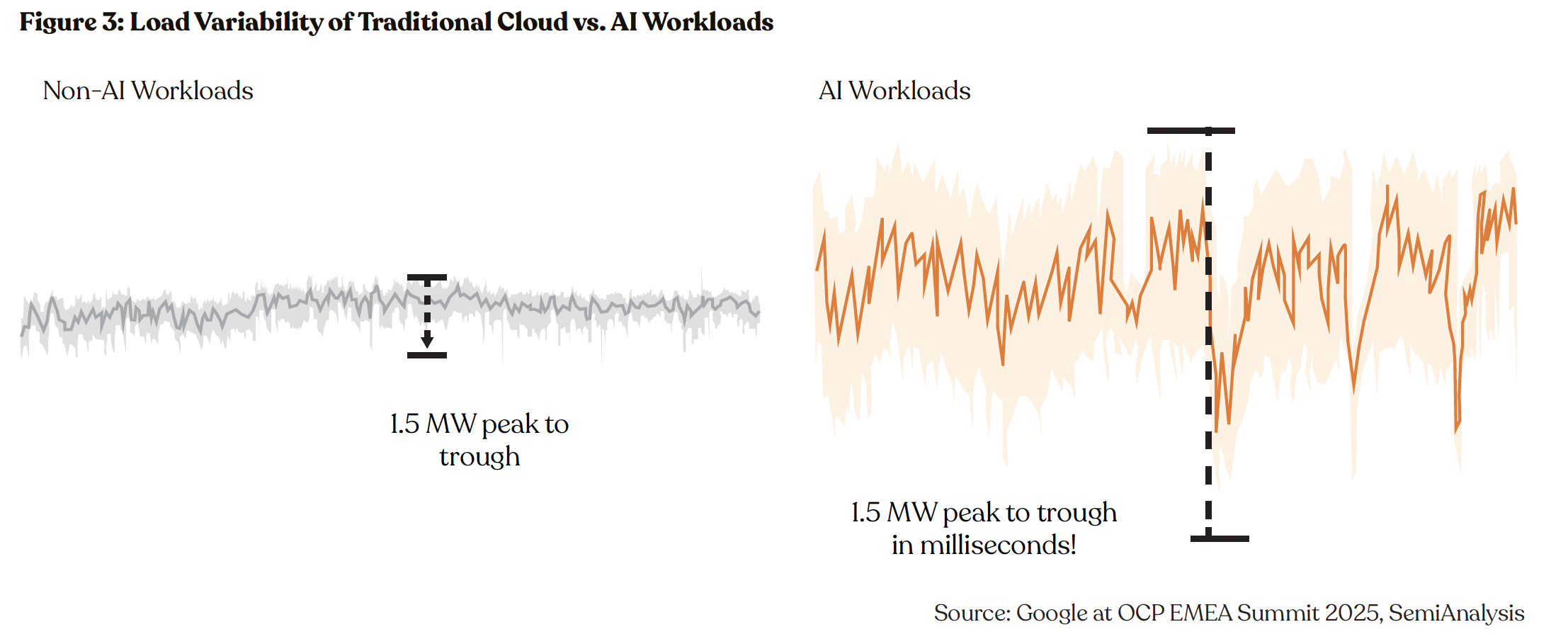

Another increasingly critical dimension of the AI boom lies in the power grid itself. The largest AI labs are racing to build multi-gigawatt-scale data centers. Not only is the scale massive, but AI training workloads also have a distinctive load profile, swinging abruptly between full load and nearly idle in fractions of a second.

Unlike traditional cloud workloads, which have relatively steady and predictable loads, AI training workloads create extreme, instantaneous fluctuations in power draw. Tens of thousands of GPUs can surge from near-idle to full capacity, or drop back again, in fractions of a second. This can happen when GPUs pause for checkpointing, synchronize during collective communication, or when a massive training job starts or stops. This leads to sudden swings of tens of megawatts, equivalent to powering or shutting off an entire industrial complex in an instant.

A research paper on Meta’s LLaMA 3 training offers a glimpse of this challenge. The model was trained on computer clusters packing only 24,576 NVIDIA H100 Tensor Core GPUs, representing roughly 30 MW of IT load (per SemiAnalysis). Even at that scale, the team reported grid instability concerns from power fluctuations. Now imagine the implications as next-generation models train on 100,000 to 1 million GPUs, with total energy draw measured in gigawatts.
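To get a feel for that jump, the LLaMA 3 figures can be extrapolated linearly. This is a crude sketch that assumes the same IT load per GPU as the quoted 30 MW for 24,576 H100s and ignores cooling overhead, newer chips, and utilization differences; the constant and function names are illustrative.

```python
LLAMA3_GPUS = 24_576        # H100 count quoted above
LLAMA3_IT_LOAD_MW = 30.0    # approximate IT load quoted above (per SemiAnalysis)


def projected_load_mw(gpu_count: int) -> float:
    """Linear extrapolation of IT load, assuming constant power per GPU."""
    per_gpu_mw = LLAMA3_IT_LOAD_MW / LLAMA3_GPUS
    return gpu_count * per_gpu_mw


for gpus in (24_576, 100_000, 1_000_000):
    print(f"{gpus:>9,} GPUs -> ~{projected_load_mw(gpus):,.0f} MW of IT load")
```

Under these assumptions a million-GPU cluster lands above one gigawatt of IT load before cooling and other facility overhead are counted.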

Roughly 50% of the US data centers under development are proposed for places that already host large clusters of data centers, further straining local grids. Power grids were never designed to handle this pattern. At gigawatt scale, the worst-case scenario is a blackout for millions of Americans.

The convergence of massive power requirements and grid instability challenges has created distinct opportunities for startups in nuclear energy, particularly small modular reactor (SMR) companies, and grid management. Tech giants have committed over $10 billion to nuclear partnerships, with 22 GW of nuclear projects under development globally. The appeal of SMRs goes beyond their capacity as they solve multiple data center challenges simultaneously. While data centers invest heavily in cooling systems to dissipate GPU heat, SMRs can provide both electricity and process heat for absorption chillers. The waste heat from data centers becomes viable for district heating applications, creating an integrated thermal ecosystem.

Among SMR start-ups, TerraPower and X-energy are the front-runners. TerraPower raised $650 million in June 2025 from NVIDIA's NVentures, Bill Gates, and HD Hyundai, bringing total private funding to over $1.4 billion. With non-nuclear construction already underway in Wyoming and regulatory approval expected in 2026, TerraPower has the most advanced SMR deployment timeline for addressing immediate AI infrastructure needs.

X-energy stands out as the most venture-ready SMR company with its Xe-100 reactor technology generating 80 MW per unit and scaling to 960 MW. The company secured $700 million in Series C-1 funding in early 2025, led by Amazon's Climate Pledge Fund, with participation from Citadel founder Ken Griffin, Ares Management, and NVIDIA's venture arm. Amazon has committed to bringing more than 5 GW online by 2039, representing the largest commercial SMR deployment target to date.

While SMRs address supply, managing the unprecedented demand volatility requires intelligence at the grid edge. Utilidata, based in Rhode Island, is addressing this. The company closed a $60.3 million Series C in April 2025, led by Renown Capital Partners with participation from NVIDIA, Quanta Services, and Keyframe Capital. Their Karman platform, built on custom NVIDIA Jetson Orin modules, provides edge AI capabilities directly at smart meters and grid infrastructure.
