Venture Bytes #126: Top Three Tech Trends For 2026

Top Three Tech Trends For 2026

AI was the dominant theme in 2025 and is expected to remain so in 2026, all the bubble talk notwithstanding. In 2026, we expect the technology to transition from a productivity tool to an autonomous system, reshaping how work is executed across digital, physical, and security-critical environments. Against this backdrop, we have identified the following three trends that will shape 2026 and beyond:

- AI Agents Will Become Teammates: Best Investment Idea – Sierra AI

- AI Will Move Deeper into the Physical World: Best Investment Idea – Physical Intelligence

- Counter Drone Tech Will Become Essential Military Infrastructure: Best Investment Idea – CHAOS Industries

AI Agents Will Graduate from Tools to Teammates

Last year, we predicted a global embrace of AI agents. That has now become unmistakable reality. Salesforce’s Agentforce has crossed 9,500 paid deals with 330% YoY ARR growth, processing 3.2 trillion tokens through its LLM gateway. ServiceNow has deployed more than 1,000 AI agents across customer accounts, cutting complex case-handling time by 52%. Microsoft’s Copilot Studio is now used by 90% of the Fortune 500, with individual users adopting agentic workflows at 4x the rate of traditional software. The agent wave is no longer emerging; it is scaling.

In 2026, agents will advance beyond reactive, single-task helpers and evolve into autonomous, proactive, and accountable teammates. The early signs appeared in 2025: 24% of executives reported that AI agents already take independent action within their organizations, according to an IBM survey. The foundations of what some are calling agentic organizations are beginning to take shape, where software doesn’t just support work, but actively participates in it.

This shift is powered by reasoning capabilities that have quietly crossed critical thresholds. Foundation models, trained on multimodal data and refined through constitutional and reinforcement learning, now demonstrate autonomous reasoning rather than simple pattern completion. OpenAI’s o1 family has shown robust chain-of-thought reasoning across complex decision domains. Meanwhile, multi-agent orchestration frameworks, from LangGraph to Anthropic’s Model Context Protocol (MCP), enable specialized agents to coordinate, share context, and solve problems collaboratively. The enterprise stack is moving from “AI inside applications” to AI as a network of cooperating digital teammates.

Executives are preparing accordingly. In a recent global survey, nearly 70% of business leaders said autonomous AI agents will transform operations in the coming year. Analysts expect top HR and collaboration platforms to introduce features for managing AI “workers” alongside human staff – scheduling tasks, monitoring performance, and assigning responsibility across hybrid teams.

The adoption curve is steep. Gartner projects that by 2026, 40% of enterprise applications will integrate task-specific AI agents, up from less than 5% in 2025, a more than eightfold increase in just 12 months. And by 2028, Gartner expects 40% of CIOs to require “guardian agents” that autonomously track, oversee, and contain the actions of other agents. This highlights a new frontier: not only building capable agents, but building the infrastructure of accountability that enables them to operate safely at scale.

Best Investment Idea

Founded in 2023, Sierra AI has emerged as one of the fastest-scaling companies in the agentic AI landscape. The company provides a conversational AI platform that enables enterprises to deploy autonomous customer-support agents across channels, industries, and languages. In just 18 months, Sierra reached a $10 billion valuation, with revenue surging from $20 million in 2024 to $98.3 million in 2025, nearly 400% year-over-year growth. Its customer base is weighted toward the enterprise tier, with more than half of clients generating over $1 billion in annual revenue. Sierra’s agents now touch 90% of American consumers in retail and more than 50% of U.S. households in healthcare, underscoring the scale of its penetration.

Sierra’s core differentiation lies in its ‘constellation-of-models’ architecture, which orchestrates more than 15 AI models, each selected for a specific strength, rather than relying on a single monolithic LLM. This modular approach allows Sierra to match the right model to the right task, whether it’s low-latency order management, high-precision fraud detection, or complex case resolution. Automated failover across model providers ensures resilience and uptime, giving customers enterprise-grade reliability while optimizing performance and cost.
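To make the routing-plus-failover idea concrete, here is a minimal Python sketch. It is not Sierra's implementation: the backend names, the `ProviderError` exception, and the `ROUTES` table are all hypothetical, and the two stand-in functions simply simulate one provider timing out and another answering.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


class ProviderError(Exception):
    """Raised by a (hypothetical) model backend when a call fails."""


@dataclass
class ModelBackend:
    name: str
    call: Callable[[str], str]  # takes a prompt, returns a reply


def route_with_failover(task: str, prompt: str,
                        routes: Dict[str, List[ModelBackend]]) -> str:
    """Try each backend registered for `task` in priority order."""
    errors = []
    for backend in routes[task]:
        try:
            return backend.call(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next backend.
            errors.append(f"{backend.name}: {exc}")
    raise RuntimeError(f"all backends failed for {task!r}: {errors}")


# Stand-in backends; a real deployment would wrap provider SDK calls here.
def flaky_fast_model(prompt: str) -> str:
    raise ProviderError("timeout")


def steady_model(prompt: str) -> str:
    return f"handled: {prompt}"


# Hypothetical routing table: one task type, two backends in priority order.
ROUTES = {
    "order_management": [
        ModelBackend("fast-primary", flaky_fast_model),
        ModelBackend("steady-fallback", steady_model),
    ],
}

reply = route_with_failover("order_management", "Where is order 1234?", ROUTES)
print(reply)
```

The design point is that the task type, not the caller, decides which models are tried and in what order, so a provider outage degrades to a fallback model rather than a failed customer interaction.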

AI Will Move Deeper into the Physical World

Generative AI’s first chapter delivered digital productivity. The next will push intelligence into the real world. In 2026, Physical AI will emerge as the defining trend, translating digital intelligence into operational impact through robots, drones, and smart machines. As models improve and hardware costs fall, the physical world is becoming the next domain where intelligence will scale. And robots, the most visible embodiment of Physical AI, will sit at the center of this shift.

At CES 2025, NVIDIA underscored the scale of change ahead, declaring that “Physical AI will revolutionize the $50 trillion manufacturing and logistics industries. Everything that moves, from cars and trucks to factories and warehouses, will be robotic and embodied by AI.”

The evidence is already here: 1X’s NEO Home Robot now adapts to unfamiliar environments in real time; Physical Intelligence’s Pi0 became the first robot to fold laundry with human-level dexterity straight from a hamper; and Tesla’s Optimus is executing increasingly complex warehouse tasks. Taken together, these milestones signal a structural shift in how machines perceive, reason about, and act upon the physical world.

Underpinning this progress are vision models that have crossed critical thresholds. Vision-language models (VLMs), trained with native visual grounding, now perform physical reasoning, not just pattern recognition. Meta’s 7B-parameter DINOv3 has shown that self-supervised learning can outperform traditional supervised visual backbones. SAM3 delivers zero-shot instance segmentation at high quality. And these models are becoming plug-and-play: Perceptron’s Isaac 0.1 learns new visual tasks from a handful of prompt examples with no retraining, and still runs at the edge with just 2B parameters.

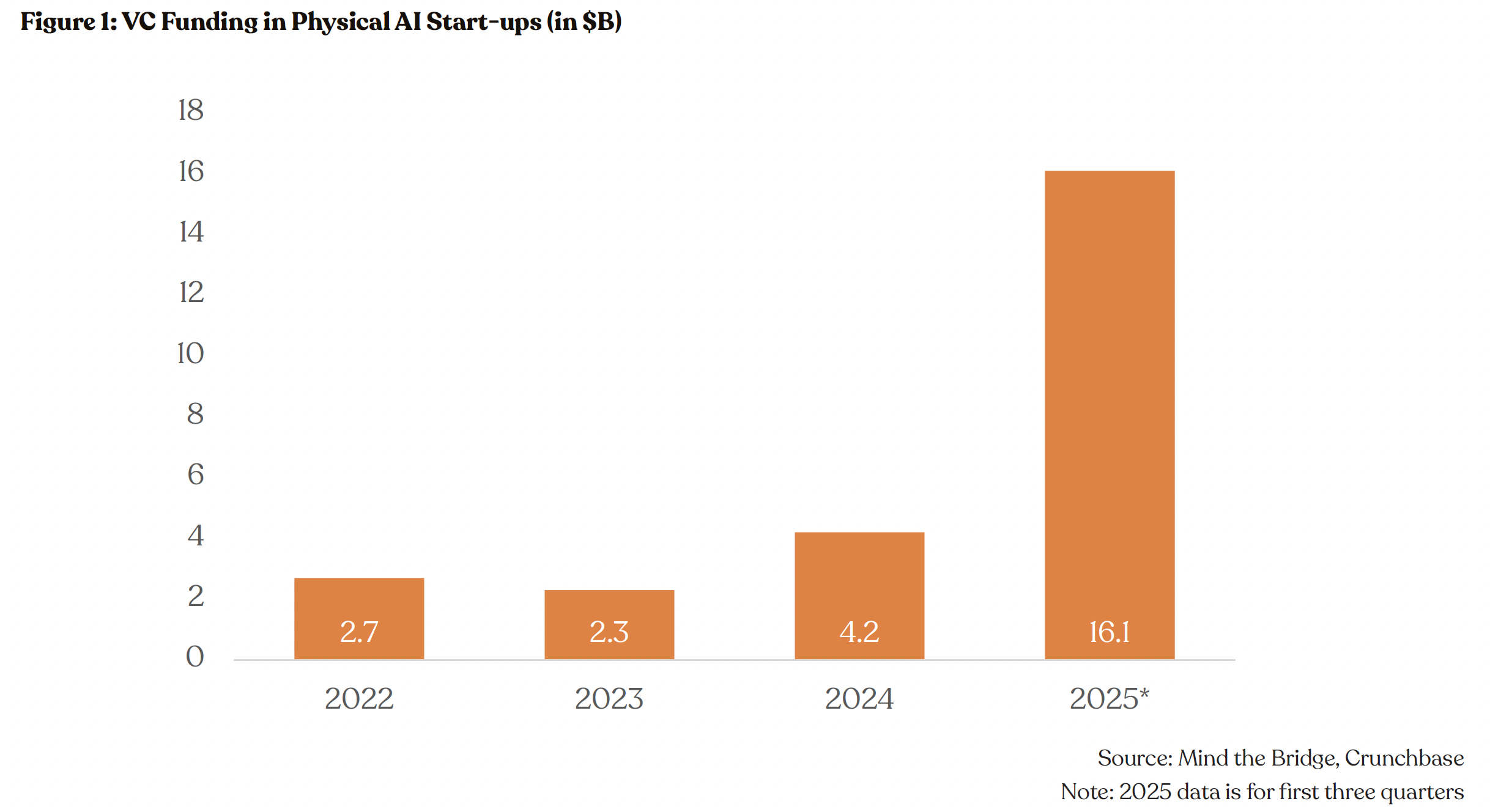

Capital has moved just as quickly. US VC investors deployed more than $16 billion into Physical AI startups in the first three quarters of 2025, nearly 280% more than all 2024 investment, per Crunchbase and Mind the Bridge. This funding is now fueling commercial-scale deployments set for 2026. Meanwhile, early proof points have validated the category: Waymo surpassed 10 million rides, and Amazon deployed its one-millionth robot.

The economics are shifting in parallel. Unitree stunned the market in July 2025 with the G1 priced at $16,000 and the R1 at just $5,900, levels previously assumed to be years away. Goldman Sachs reports a 40% year-over-year decline in manufacturing costs in 2025, dramatically outpacing the expected 15-20% annual drop. Current system prices now range from $30,000–$150,000 depending on configuration, down from $50,000-$250,000 a year earlier.

As a result, industry adoption curves are steepening. TrendForce expects global humanoid robot shipments to grow more than 700% in 2026, topping 50,000 units. Deloitte’s survey of 600 manufacturing leaders shows 80% plan to allocate at least 20% of their 2026 improvement budgets to smart manufacturing, with spending increasingly directed toward agentic AI and physical robotics. The Manufacturing Leadership Council reports that while only 9% of manufacturers deploy Physical AI today, 22% expect to do so within two years. And Goldman Sachs projects 50,000-100,000 global shipments in 2026, with unit economics trending toward $15,000–$20,000 per robot.

Best Investment Idea

While many strong companies are emerging in Physical AI, platform- and software-first players are best positioned to win. One clear standout is Physical Intelligence, a software-first robotics company building general-purpose AI that can operate any robot, for any task.

Founded in early 2024, Physical Intelligence is essentially an ‘intelligence layer’ for the entire robotics ecosystem. The company reached a $5.6 billion valuation in just 20 months, doubling from its $2.8 billion Series A valuation only seven months prior. This pace places it among the fastest-scaling AI startups of the current cycle. The company has raised $1.1 billion across three rounds from top-tier investors including CapitalG, Sequoia, and Jeff Bezos, a strong endorsement of its pursuit of general robotic intelligence.

Most recently, Physical Intelligence unveiled robots capable of real-world learning and continuous improvement, powered by its new π*0.6 model. π*0.6 markedly boosts robot efficiency, doubling throughput on tasks such as espresso making and laundry folding. This leap underscores the company’s core thesis: software, not hardware, will be the primary driver of performance in the Physical AI era.

Counter Drone Technology Will Be More Integral to the Military Arsenal

Traditional airspace security operated on the assumption that threats would be visible, expensive, and detectable. That assumption is collapsing. As drone proliferation accelerates and critical infrastructure becomes increasingly vulnerable, the economics of airspace defense are inverting. And counter-UAS (C-UAS) systems, the most visible embodiment of this transformation, will sit at the center of this shift.

At the White House Task Force on FIFA World Cup 2026 security in November 2025, federal officials underscored the urgency ahead: "Drones may be used to deploy chemicals, explosive devices, or to conduct surveillance. When we're talking about large crowds gathering, that would be catastrophic." The statement captures a structural vulnerability. The democratization of aerial threats has outpaced defensive capabilities, creating a multi-billion-dollar market responding to existential infrastructure risks.

Drone-related disruptions at European airports quadrupled in 2025 compared to 2024, with Germany recording 192 incidents (up 36% YoY), Denmark reporting 107 illegal flights near airports, and Belgium suffering 10 major incidents in just 8 days in November. Russia launched over 5,600 drones into Ukraine in September 2025 alone—a 38% month-over-month increase. Taken together, these incidents signal a structural shift in how nations must conceptualize airspace sovereignty—no longer as a domain secured by expensive manned systems, but as contested battlespace vulnerable to $500 commercial drones requiring billion-dollar defensive infrastructure.

Underpinning this urgency are threats that have crossed critical accessibility thresholds. Consumer drones costing $200-$2,000 can now carry 5+ pound payloads, fly autonomously via GPS waypoints, and operate beyond visual line of sight using cellular connectivity. Swarm coordination software enables simultaneous multi-drone attacks that saturate traditional air defense systems designed for singular, high-value targets. And encrypted communications make attribution nearly impossible. During the September 2025 Copenhagen incident, Danish Prime Minister Mette Frederiksen called it "the most serious attack on Danish critical infrastructure to date," yet no perpetrator was identified. The asymmetry is profound: a motivated adversary can deploy coordinated drone attacks for under $10,000 that require $50-100 million in counter-drone infrastructure to defend against.

Regulatory catalysts are accelerating adoption. In July 2025, the DoD announced the creation of a joint interagency counter-drone task force. The US government also launched a $500 million Counter-UAS grant program, with $250 million reserved specifically for FIFA World Cup 2026 host cities.

The technology maturation explains the investment surge. First-generation C-UAS relied on radio-frequency jamming, which disrupted all wireless communications indiscriminately, unacceptable for civilian airports. Second-generation systems added radar detection but struggled with small drone cross-sections and clutter from birds/weather. Third-generation platforms now integrate AI-powered multi-sensor fusion, combining radar, electro-optical cameras, RF scanners, and acoustic sensors, to achieve 10-minute early warning versus traditional systems' 2–3-minute detection windows. CHAOS Industries' Coherent Distributed Networks (CDN) exemplifies this leap. The technology uses distributed nodes operating with wireless time synchronization to enable coordinated sensing across kilometers, detecting low-altitude threats 10x faster than standalone exquisite radars costing $50+ million.
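As a rough illustration of why fusing several sensors helps, the sketch below combines per-sensor detection confidences with a noisy-OR rule. This is a textbook technique chosen for illustration, not CHAOS Industries' method or any vendor's; the confidence values are made up, and treating the sensors as independent is a simplifying assumption.

```python
from typing import Dict


def fuse_detections(confidences: Dict[str, float]) -> float:
    """Noisy-OR fusion: probability that at least one sensor has detected
    the target, treating sensors as independent (a simplifying assumption)."""
    miss_probability = 1.0
    for sensor, p in confidences.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{sensor}: confidence must be in [0, 1]")
        miss_probability *= 1.0 - p  # chance this sensor misses the target
    return 1.0 - miss_probability


# Illustrative (invented) per-sensor confidences for one candidate track.
readings = {
    "radar": 0.5,
    "electro_optical": 0.4,
    "rf_scanner": 0.5,
    "acoustic": 0.2,
}

fused = fuse_detections(readings)
print(f"fused detection confidence: {fused:.2f}")
```

Even though no single sensor in this example is more than 50% confident, the combined estimate is far higher, which is the intuition behind multi-sensor platforms detecting small, low-altitude drones earlier than any standalone sensor.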

The battlefield validation is undeniable. Ukraine's military now uses counter-drone interceptor drones costing $2,000-$5,000 to engage Russian Shahed-136 drones priced at $20,000-$38,000, achieving cost-exchange ratios of 4:1 to 10:1 versus traditional air defense missiles at $1.2-$3 million per shot. This economic inversion is forcing NATO allies to rethink air defense doctrine.
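The cost-exchange arithmetic can be checked in a few lines of Python. The prices come from the paragraph above; the ratio is defined here as target cost divided by interceptor cost, which is our reading of the figures rather than a definition the sources state. Note that the quoted 4:1 to 10:1 range corresponds to the low-end $20,000 Shahed price.

```python
def cost_exchange_ratio(target_cost: float, interceptor_cost: float) -> float:
    """Dollars of attacker hardware destroyed per dollar of defender spend."""
    return target_cost / interceptor_cost


SHAHED_LOW, SHAHED_HIGH = 20_000, 38_000          # Shahed-136 price range
INTERCEPTOR_LOW, INTERCEPTOR_HIGH = 2_000, 5_000  # interceptor drone range
MISSILE_LOW, MISSILE_HIGH = 1_200_000, 3_000_000  # air defense missile range

# Interceptor drones against a low-end Shahed: the defender comes out ahead.
best_case = cost_exchange_ratio(SHAHED_LOW, INTERCEPTOR_LOW)
worst_case = cost_exchange_ratio(SHAHED_LOW, INTERCEPTOR_HIGH)

# A missile against the most expensive Shahed: the defender still overspends.
missile_case = cost_exchange_ratio(SHAHED_HIGH, MISSILE_LOW)

print(f"interceptor drone: {worst_case:.0f}:1 to {best_case:.0f}:1")
print(f"missile, most favorable case: {missile_case:.3f}:1")
```

With interceptor drones the ratio sits well above 1, while with missiles it stays far below 1 even in the defender's most favorable pairing. That reversal is the economic inversion described above.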

Best Investment Idea

CHAOS Industries develops Coherent Distributed Networks (CDN) technology for defense and critical infrastructure, specializing in advanced radar systems for drone detection and air defense applications. Founded in 2022, the company raised a $275 million Series C in March 2025 and a $510 million Series D in November 2025 at a $4.5 billion valuation, led by Valor Equity Partners with participation from 8VC and Accel. Its CDN platform is powered by advanced radar and wireless time synchronization technology. Unlike traditional "exquisite radars" costing $50-100 million and detecting threats 2-3 minutes before interception, CHAOS's distributed architecture delivers 10-minute early warning at 1/10th the cost through networked sensors operating in perfect coordination.

CHAOS has secured contracts with Eglin Air Force Base and is collaborating across the defense technology ecosystem. The company recently announced a partnership with Forterra to integrate autonomous counter-UAS capabilities, combining CHAOS's detection/tracking with Forterra's AI-powered kinetic neutralization systems.

World Models Are AI’s Next Moonshot

For all their eloquence, today's large language models (LLMs) are like brilliant scholars trapped in a library. They can discuss the world endlessly, but they've never actually experienced it. They don't understand that a cup falls when you let it go, that shadows follow objects, or that you can't walk through walls. This fundamental gap between linguistic fluency and physical understanding is why the AI industry's smartest minds are now racing toward a new paradigm: world models.

Unlike LLMs, which excel at statistical pattern matching across text, world models construct internal representations of environments and predict how they evolve over time. Rather than forecasting the next token in a sequence, they anticipate future states in the real world, simulating movements, collisions, object permanence, gravity, and causality. They do this by learning from multimodal data to build dynamic, predictive understandings of physical environments.

Building world models is not a new ambition. As far back as the 1940s, early cognitive scientists speculated that intelligent beings might function by carrying internal models of the world – mental simulations that let them test outcomes before acting. In the early days of AI, this idea briefly flourished through symbolic ‘block world’ systems that answered physical reasoning questions. But by the 1980s, such handcrafted models were seen as impractical, and AI turned toward data-driven pattern recognition. Now, that ambition is resurfacing, with multimodal inputs (videos, images, 3D scans, sensor feeds, etc.), transformer-scale architectures, and planetary-scale compute.

The shift is attracting AI's biggest names. AI pioneer Fei-Fei Li's World Labs just announced Marble, its first commercial world model. World Labs’ research is focused on bringing AI systems closer to human-level spatial understanding, with applications expected in areas including robotics, autonomous systems, industrial automation, and gaming. The company’s work is centered on large world model (LWM) technology, which trains AI to understand space, movement, and physical context rather than relying only on text-based reasoning. Yann LeCun, Meta’s chief AI scientist and Turing Award winner, is also reportedly preparing to launch a world model startup after leaving Meta.

“…within three to five years, this [world models, not LLMs] will be the dominant model for AI architectures, and nobody in their right mind would use LLMs of the type that we have today,” -Yann LeCun, Meta’s chief AI scientist and Turing Award winner.

Among incumbents, Google DeepMind is pioneering research with Genie 3, which generates open-world virtual landscapes from text prompts and allows real-time interaction. Unlike video generation models, Genie 3 renders frame-by-frame while considering past interactions to maintain environmental consistency.

Meta is developing its Video Joint Embedding Predictive Architecture (V-JEPA), a model trained on raw video to replicate how children learn passively by observing the world. NVIDIA views world foundation models as critical to future growth, offering the Cosmos platform for accelerated physical AI development and its Omniverse simulation environment.

OpenAI has positioned its video model Sora as a world simulator, suggesting that scaling video generation represents a path toward general-purpose simulators of the physical world. The company believes this capability will be "an important milestone for achieving AGI". Chinese tech giants like Tencent and ambitious newcomers like the UAE's Mohamed bin Zayed University are joining the chase.

The commercial upside of world models appears massive, with robotics representing the first near-term commercial opportunity. World models give robots the ability to understand spatial structure, transfer skills across environments, and plan multi-step tasks in simulation before executing them in the physical world. NVIDIA’s Cosmos platform, for example, trains robotic systems inside photorealistic digital environments. Additionally, companies like Wayve and Waabi are leveraging similar simulation capabilities to accelerate development of autonomous driving systems.
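The phrase "plan in simulation before executing" can be illustrated with a toy Python sketch. Everything here is a stand-in: `predict_next` is a hand-written rule for a robot moving along a corridor with walls at positions 0 and 10, where a real system would use a learned model, and the planner simply tries every short action sequence inside that model and keeps the one that ends closest to the goal.

```python
from itertools import product
from typing import Sequence, Tuple

ACTIONS = (-1, 0, 1)  # toy action set: step left, stay, step right


def predict_next(position: int, action: int) -> int:
    """Toy 'world model': predicts the next state from state and action.
    A learned model would replace this hand-written rule."""
    return max(0, min(10, position + action))  # walls at 0 and 10


def rollout(start: int, plan: Sequence[int]) -> int:
    """Simulate a whole plan inside the model and return the final state."""
    state = start
    for action in plan:
        state = predict_next(state, action)
    return state


def plan_in_simulation(start: int, goal: int, horizon: int) -> Tuple[int, ...]:
    """Enumerate every action sequence, simulate each, keep the best one."""
    candidates = product(ACTIONS, repeat=horizon)
    return min(candidates, key=lambda plan: abs(rollout(start, plan) - goal))


best_plan = plan_in_simulation(start=8, goal=10, horizon=3)
print("chosen plan:", best_plan, "-> predicted end state:", rollout(8, best_plan))
```

No action touches the real world until a plan has been selected. Real systems replace exhaustive enumeration with sampling or learned policies, since the number of candidate plans grows exponentially with the planning horizon.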

Autonomous vehicles stand to benefit from world models through pre-labeled synthetic data generation, diverse scenario simulation (traffic patterns, weather, pedestrian behaviors), and sensor fusion training. Chinese automakers are accelerating deployment: Huawei's Qiankun ADS 4.0, powered by a world model architecture, began appearing in vehicles in September. Nio's World Model 2.0 can simulate 216 potential scenarios within 100 milliseconds, selecting optimal paths through algorithmic filtering. According to Frost & Sullivan, more than four-fifths of autonomous driving algorithms now use world models for auxiliary training, reducing costs by nearly 50% and improving efficiency by around 70%.

Gaming and interactive entertainment represent another near-term commercialization pathway. The gaming industry, currently valued at around $300 billion (per Grand View Research), is moving quickly to adopt world models. Google’s Genie 3 can now generate complete 3D playable game worlds directly from text prompts, assembling environments and interactions dynamically. Meanwhile, companies like Runway and Rosebud are building layers that incorporate generative gameplay logic directly atop world-model outputs.

However, the technology still faces material challenges. The most pressing is data availability. While LLM developers scraped most of their necessary training data from the internet, world models require extensive multimodal information that isn't as easily accessible or consolidated. As Encord president and co-founder Ulrik Stig Hansen notes, “Developing world models requires extremely high-quality multimodal datasets at massive scale to accurately reflect real-world perception and interaction.” Based in London, Encord provides one of the most extensive open-source resources in the field, offering more than one billion pairs of images, videos, audio, text, and 3D point clouds, as well as 1+ million curated human annotations.

Another critical obstacle is the sheer computational power required to build physical AI systems. Building physical AI models requires petabytes of video data and tens of thousands of compute hours to process, curate, and label. Reliability challenges also persist, with generative models still prone to ‘hallucinations.’ For world models to be trusted in high-stakes applications like autonomous vehicles or robotic surgery, outputs must be verifiable and uncertainty must be quantified.

Finally, there is also the persistent gap between simulation and reality. To become operationally useful, world models must anchor their physics engines and environmental dynamics in highly accurate real-world priors.
